AI-DRIVEN CYBER DEFENSE

AI Security Starts Here: Practical Protection for Real Organizations

Generative AI and large language models are already embedded in your business. They also expand your attack surface. SecureTrust Cyber hardens your AI stack with the same discipline we apply to critical infrastructure: clear boundaries, verifiable controls, and continuous monitoring across users, data, and workloads.

- Secure LLMs, copilots, and in-house AI services against prompt injection, data leakage, and model abuse.

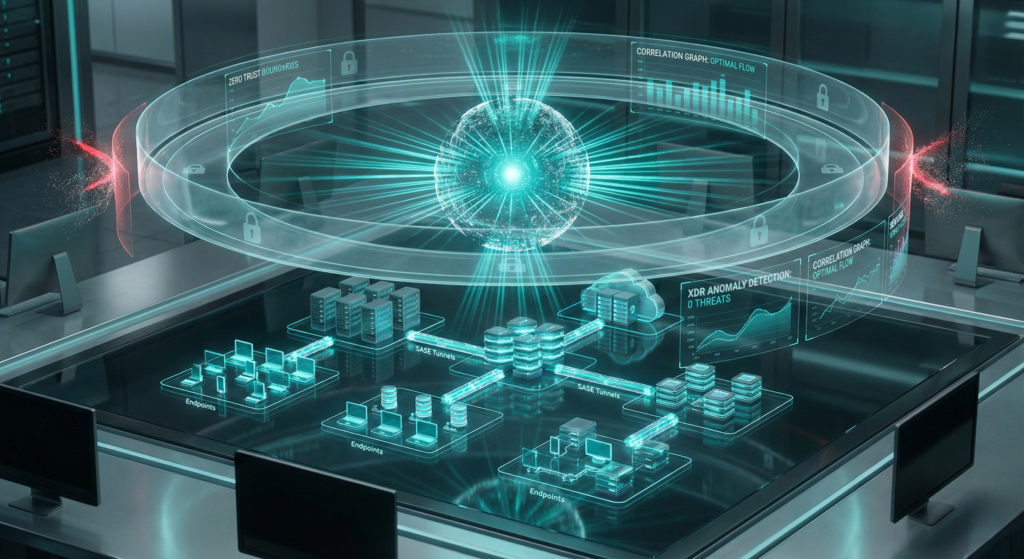

- Unify SASE, XDR, SIEM, and Zero Trust controls in a single ZTX Platform for AI and non-AI workloads.

- Align AI security with regulatory expectations (CMMC, NIST SP 800-171, HIPAA, ISO/IEC 42001) instead of bolting on point tools.

Why AI Security Has to Be a Priority Now

AI is not a lab experiment anymore. It is embedded in email, productivity suites, customer portals, development pipelines, and clinical or operational systems. That means:

- New attack paths – prompt injection, data exfiltration through chat interfaces, and compromised AI agents can bypass traditional perimeter controls.

- Data exposure risk – sensitive data (CUI, PHI, PCI, internal IP) can be unintentionally fed into third-party models or leaked through logs and telemetry.

- Regulatory scrutiny – regulators are explicitly calling out AI governance, model transparency, and auditability in updated guidance and emerging standards.

- Blended threats – threat actors are using AI to accelerate phishing, business email compromise, and social engineering at scale.

If AI security is not designed in from the beginning, you end up with an opaque, unauditable system that quietly undermines your compliance program and incident response playbooks.

Practical Foundations for Securing AI

SecureTrust’s ZTX Platform and engineering team focus on five domains. Together, they turn AI from an unmanaged risk into a controlled, observable capability.

1. Strategy and Secure Design

- Define where AI is allowed to run, what data it can see, and which decisions must keep a human in the loop.

- Apply Zero Trust principles to AI pipelines: verify identity, device, and context at every step.

- Threat-model AI use cases, including prompt injection, data poisoning, model theft, and abuse of AI agents.

- Establish explicit "kill switches" for AI services and workflows when risk thresholds are exceeded.
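
To make the kill-switch idea concrete, here is a minimal sketch in the style of a circuit breaker. It is an illustration only: the `KillSwitch` class, the 0-to-1 risk score, and the threshold values are assumptions for this example, not a description of the ZTX Platform.

```python
from dataclasses import dataclass, field


@dataclass
class KillSwitch:
    """Disables an AI workflow once too many high-risk events accumulate.

    All names and thresholds are hypothetical and chosen for illustration.
    """
    risk_threshold: float = 0.8    # scores at or above this count as high risk
    max_high_risk_events: int = 3  # trip after this many high-risk events
    tripped: bool = False
    _high_risk_count: int = field(default=0, repr=False)

    def record(self, risk_score: float) -> None:
        """Record one risk score and trip the switch if the limit is reached."""
        if risk_score >= self.risk_threshold:
            self._high_risk_count += 1
        if self._high_risk_count >= self.max_high_risk_events:
            self.tripped = True

    def allow_request(self) -> bool:
        """Return False once tripped; the switch stays off until reset."""
        return not self.tripped

    def reset(self) -> None:
        """Manual reset, intended to run only after human review."""
        self.tripped = False
        self._high_risk_count = 0
```

The switch deliberately does not re-enable itself. Requiring an explicit `reset()` keeps a human in the loop before the workflow resumes.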

2. Operations, Monitoring, and Resilience

- Ingest AI API logs, vector database activity, and agent actions into our SIEM/XDR stack for correlation and alerting.

- Run red-teaming and adversarial testing against LLM endpoints and AI-augmented applications.

- Track model versioning, training data lineage, and configuration changes for auditability.

- Design fail-safe modes when models are unavailable or return unsafe outputs.
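
A fail-safe mode can be as simple as a wrapper that never passes a model failure or an unsafe answer straight to the user. The sketch below assumes two caller-supplied functions, `call_model` and `is_unsafe`; both are hypothetical placeholders for whatever model client and output filter you actually run.

```python
from typing import Callable, Tuple

FALLBACK_MESSAGE = (
    "This request could not be completed automatically "
    "and has been routed for human review."
)


def safe_generate(
    prompt: str,
    call_model: Callable[[str], str],
    is_unsafe: Callable[[str], bool],
) -> Tuple[str, str]:
    """Return (status, text), falling back on model failure or unsafe output."""
    try:
        output = call_model(prompt)
    except Exception:
        # Any model-side failure (timeout, outage, bad response) degrades
        # to the fallback instead of surfacing an error to the user.
        return ("model_unavailable", FALLBACK_MESSAGE)
    if is_unsafe(output):
        return ("unsafe_output", FALLBACK_MESSAGE)
    return ("ok", output)
```

The returned status string gives monitoring a clear signal to alert on, separate from the text shown to the user.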

3. Supply Chain and Model Stack Security

- Maintain a software bill of materials (SBOM) for AI models, libraries, datasets, and plug-ins.

- Restrict use of unverified models, open-source components, and community plug-ins in production AI flows.

- Apply SASE and DNS security to control where models can call out to and what external resources they can access.

- Continuously scan AI-supporting infrastructure (containers, Kubernetes, cloud workloads) for vulnerabilities and misconfigurations.
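
Controlling where models and agents can call out to usually happens at the network layer, but the same rule can be mirrored inside the application. The following sketch shows an exact-match egress allowlist. The host names are made-up examples, and this check is meant to complement network controls, not replace them.

```python
from typing import Iterable
from urllib.parse import urlsplit

# Hypothetical allowlist; replace with the hosts your AI workloads need.
ALLOWED_HOSTS = frozenset({"api.internal.example.com", "models.example.com"})


def is_egress_allowed(url: str, allowed_hosts: Iterable[str] = ALLOWED_HOSTS) -> bool:
    """Allow only HTTPS requests to hosts that match the allowlist exactly."""
    try:
        parts = urlsplit(url)
    except ValueError:
        # Malformed URLs (for example, a broken IPv6 literal) are denied.
        return False
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").lower()
    # Exact match only, so "models.example.com.evil.net" is rejected.
    return host in set(allowed_hosts)
```

Exact matching is a deliberate choice here: suffix or substring checks are a common source of allowlist bypasses.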

4. People, Governance, and Policy

- Define clear acceptable-use policies for internal and third-party AI tools.

- Train staff on what must never be entered into public or shared AI systems (CUI, PHI, non-public financials, credentials, etc.).

- Establish a cross-functional AI governance group (security, IT, legal, compliance, business owners).

- Document AI risk decisions, exceptions, and compensating controls for CMMC, HIPAA, and internal audit.
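
Policy on what must never be entered into public AI tools is easier to enforce when a basic check runs before a prompt leaves your environment. The sketch below flags a few no-go categories with regular expressions. The category names and patterns are illustrative assumptions and are far from a complete data loss prevention rule set.

```python
import re
from typing import List

# Hypothetical, deliberately simple patterns for illustration only.
NO_GO_PATTERNS = {
    "ssn_like": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "cui_marking": re.compile(r"\bCUI\b"),
    "credential_assignment": re.compile(
        r"(?i)\b(?:password|api[_-]?key|secret)\s*[:=]\s*\S+"
    ),
}


def find_no_go_categories(text: str) -> List[str]:
    """Return the sorted names of no-go categories detected in the text."""
    return sorted(
        name for name, pattern in NO_GO_PATTERNS.items() if pattern.search(text)
    )


def may_send_to_public_ai(text: str) -> bool:
    """Permit sending only when no no-go category was detected."""
    return not find_no_go_categories(text)
```

A check like this catches obvious mistakes. It supports staff training and governance but does not replace them.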

5. Access Control and Identity

- Enforce strong MFA and conditional access for all AI administration consoles and APIs.

- Segment AI workloads inside dedicated Zero Trust enclaves with least-privilege network access.

- Monitor for excessive privilege or autonomous behavior in AI agents and service accounts.

- Integrate identity events with XDR to quickly detect compromised AI administrator accounts.
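
One simple way to spot excessive privilege in AI agents and service accounts is to compare the scopes each identity has been granted with the scopes it has actually been observed using. The sketch below assumes both are available as plain sets of scope names; the agent and scope names in the usage example are hypothetical.

```python
from typing import Dict, Set


def audit_agent_permissions(
    granted: Dict[str, Set[str]],
    observed: Dict[str, Set[str]],
) -> Dict[str, Dict[str, Set[str]]]:
    """Report unused grants and out-of-policy usage per agent."""
    findings: Dict[str, Dict[str, Set[str]]] = {}
    for agent in sorted(set(granted) | set(observed)):
        allowed = granted.get(agent, set())
        used = observed.get(agent, set())
        unused = allowed - used          # candidates for least-privilege cleanup
        out_of_policy = used - allowed   # activity beyond what was granted
        if unused or out_of_policy:
            findings[agent] = {"unused": unused, "out_of_policy": out_of_policy}
    return findings
```

For example, an agent granted `tickets:read` and `tickets:write` that only ever reads tickets would be reported with `tickets:write` as unused, which is a prompt to narrow its access.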

What We Are Seeing in the Field

Our team continuously tracks how attackers are abusing AI in real environments. The same patterns keep recurring:

- Indirect prompt injection via documents, web content, or emails that AI tools later ingest and obey.

- Poisoned training data that gradually shifts recommendations, scoring, or classification outcomes in the attacker’s favor.

- Deepfake voice and video used to bypass out-of-band verification procedures and social-engineer finance staff, HR, or executive assistants.

- Shadow AI, where staff deploy unapproved copilots, browser extensions, and SaaS AI tools that quietly handle sensitive data.

- Model and API key theft from misconfigured repositories, CI/CD pipelines, and cloud storage.
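
Key theft from repositories and pipelines often starts with a secret committed in plain text. As a rough sketch, the scanner below looks for two well-known formats: AWS access key IDs (which begin with `AKIA` or `ASIA`) and PEM private key headers. It is an illustrative assumption of how such a check could look, not a substitute for a dedicated secret-scanning tool.

```python
import re
from typing import List, Tuple

# Two widely documented secret formats; real scanners cover many more.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----"),
}


def scan_text_for_secrets(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, pattern_name) for each suspected secret."""
    findings: List[Tuple[int, str]] = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, name))
    return findings
```

Running a check like this in a pre-commit hook or CI step surfaces the problem before the secret reaches a shared repository.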

The solution is not another dashboard. It is a disciplined security architecture that treats AI as part of your core attack surface, not as a special exemption.

Five Actions to Start Securing AI This Quarter

You do not need a multi-year transformation plan to meaningfully reduce AI risk. Start with focused, measurable steps.

- Inventory AI usage. Identify every AI tool in use – official and shadow – across departments and cloud environments.

- Define no-go data categories. Clearly state which data classes cannot be processed by public or third-party AI services.

- Enforce strong access control. Apply MFA, conditional access, and network segmentation to AI admin portals and APIs.

- Integrate AI telemetry into your SIEM/XDR. Start ingesting AI-related logs and security events into the ZTX Platform.

- Run an AI security assessment. Work with our engineers to map your AI attack surface, prioritize gaps, and build a remediation roadmap.
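
For the telemetry step, it helps to agree on a small, consistent event shape before sending anything to a SIEM. The sketch below builds one JSON event per AI request and stores a hash of the prompt instead of the raw text, so that logs do not become a second copy of sensitive data. The field names are assumptions for illustration, not a ZTX Platform schema.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def build_ai_event(
    user_id: str,
    model: str,
    prompt: str,
    action: str,
    timestamp: Optional[datetime] = None,
) -> str:
    """Serialize one AI request as a JSON event without the raw prompt."""
    ts = timestamp or datetime.now(timezone.utc)
    event = {
        "timestamp": ts.isoformat(),
        "user_id": user_id,
        "model": model,
        "action": action,
        # The hash lets analysts correlate repeated prompts without
        # exposing their content in the log pipeline.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    }
    return json.dumps(event, sort_keys=True)
```

Whether hashing is enough depends on your data classes. Short or guessable prompts can still be recovered from an unsalted hash, so treat this as a starting point.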

Work With Engineers, Not Just a Sales Deck

SecureTrust Cyber is led by security engineers with experience defending high-value, regulated environments. We bring a unified SASE, XDR, and SIEM stack, plus AI-focused threat modeling and governance, so your AI program can move fast without cutting corners.

- Engineer-led discovery and design sessions.

- Documented mappings to CMMC, NIST SP 800-171, HIPAA, and internal policies.

- Clear implementation plan using our ZTX Platform and your existing stack where it makes sense.